Virtual audio cables

This is another post about the mess that is Linux audio. To follow along you may want to read the previous one first.

The goal this time

This time I want to create a virtual audio cable. That is, I want one application to be able to select a “speaker”, which another application can then use as a “microphone”.

The reason for this is that I want to use GNU Radio to decode multiple channels at the same time, and route the audio from each channel differently. Specifically, my goal is to use my ICom 7300 in IF mode (which gives me 12 kHz of audio bandwidth), tuned to cover both the FT8 and JS8 HF frequencies, and then have WSJT-X listen on a virtual sound card carrying FT8, and JS8Call listen on a virtual sound card carrying JS8.

You can also use it to have an SDR capture the 40, 15, and 20 meter bands, and create “audio cards” for every single digital mode in all these bands.

If you have a fast CPU you can capture every single one of the HF bands and do this, though you may be limited by the dynamic range of your SDR.

Creating virtual cables

We could use modprobe snd_aloop to create loopback ALSA devices in the kernel. But I’ve found that to be counterintuitive, buggy, and incompatible (not every application supports the idea of subdevices). It also requires root, obviously. So this is best solved in user space, since it turns out that’s actually possible.

Another way to say this is that any time you want to do anything with audio under Linux, you have to carefully navigate the minefield, and not waste time on the many haunted graveyards. If you stick to my path you’ll do fine. Hopefully.

My setup is that I’m using ALSA (because this is Linux), and PipeWire (because it’s the future and PulseAudio is the sucky past).

GNU Radio needs access to the audio devices for my use case, and it only supports ALSA. Sigh.

In the last post I said that PipeWire (like PulseAudio) builds on top of ALSA. This is only partly true. As I said at the end of that post, ALSA can also create virtual sinks and sources on top of PipeWire’s (or PulseAudio’s) devices.

So in an awesome layering violation we’ll have PipeWire create the virtual cable, and then ask ALSA to create a source and sink for it.

GNU Radio will use the ALSA devices, and the other applications will use the PipeWire/PulseAudio devices, because they can.

Create virtual cables

pw-loopback -m '[ FL FR ]' --capture-props='media.class=Audio/Sink node.name=ft8_sink' &

pw-loopback -m '[ FL FR ]' --capture-props='media.class=Audio/Sink node.name=js8_sink' &

These loopback commands need to keep running. They are the “drivers” for the two loopback cables. But they’re merely user-space programs talking to PipeWire, so no root permissions are needed.
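Since both loopbacks have to stay up together, it can help to start and stop them from one script. A minimal sketch for a POSIX shell: the `pw-loopback` flags are exactly the ones used above, while the function names `start_cables`/`stop_cables` and the `PW_LOOPBACK` override (handy for a dry run with `echo`) are hypothetical.

```shell
#!/bin/sh
# Sketch: start one pw-loopback "driver" per virtual cable and remember
# its PID so all cables can be stopped together later.
# PW_LOOPBACK is a hypothetical override; it defaults to the real binary.
CABLE_PIDS=""

start_cables() {
  for name in "$@"; do
    # Same flags as the manual commands: a stereo loopback whose capture
    # side shows up as an Audio/Sink called $name.
    "${PW_LOOPBACK:-pw-loopback}" -m '[ FL FR ]' \
      --capture-props="media.class=Audio/Sink node.name=$name" &
    CABLE_PIDS="$CABLE_PIDS $!"
  done
}

stop_cables() {
  # Word splitting on $CABLE_PIDS is intentional: one PID per word.
  [ -n "$CABLE_PIDS" ] && kill $CABLE_PIDS 2>/dev/null
  CABLE_PIDS=""
}
```

In a script you would call `start_cables ft8_sink js8_sink`, then `trap stop_cables INT TERM` and `wait`, so that terminating the script also takes both cables down.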

The next step is to create ALSA devices for these four endpoints (two virtual cables with two ends each). That can be done by adding the following to ~/.asoundrc:

pcm.ft8_monitor {
  type pulse
  device ft8_sink.monitor
}

ctl.ft8_monitor {
  type pulse
  device ft8_sink.monitor
}

pcm.ft8_sink {
  type pulse
  device ft8_sink
}

ctl.ft8_sink {
  type pulse
  device ft8_sink
}

pcm.js8_monitor {
  type pulse
  device js8_sink.monitor
}

ctl.js8_monitor {
  type pulse
  device js8_sink.monitor
}

pcm.js8_sink {
  type pulse
  device js8_sink
}

ctl.js8_sink {
  type pulse
  device js8_sink
}
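The stanzas follow the same pattern for every cable, so a small generator avoids typos when adding more of them. A minimal sketch for a POSIX shell; the function name `asoundrc_for_cable` is hypothetical, and it assumes each sink name ends in `_sink` so the capture end can be named `<prefix>_monitor`, as in the entries above.

```shell
#!/bin/sh
# Sketch: print the pcm/ctl stanzas for one virtual cable in the same
# shape as the hand-written ~/.asoundrc entries.
# Assumes the sink name ends in "_sink" (e.g. ft8_sink).
asoundrc_for_cable() {
  sink=$1
  monitor="${sink%_sink}_monitor"   # ft8_sink -> ft8_monitor
  # Each entry is "<ALSA name> <PipeWire/Pulse device name>".
  for entry in "$monitor $sink.monitor" "$sink $sink"; do
    set -- $entry                    # split into $1 (ALSA name), $2 (device)
    for kind in pcm ctl; do
      printf '%s.%s {\n  type pulse\n  device %s\n}\n\n' "$kind" "$1" "$2"
    done
  done
}
```

For example, `asoundrc_for_cable ft8_sink >> ~/.asoundrc` appends the four FT8 stanzas. Running it twice for the same cable would duplicate them, so check the file afterwards.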

Now you should be able to play audio into ft8_sink and capture it from ft8_monitor, and likewise with js8_sink and js8_monitor for JS8.

When running this test you’ll probably hear the output through your default speakers. You may want to go to the “Output Devices” tab of pavucontrol and mute the two loopbacks. They should still produce sound into the virtual sources, just not play through the normal speakers.

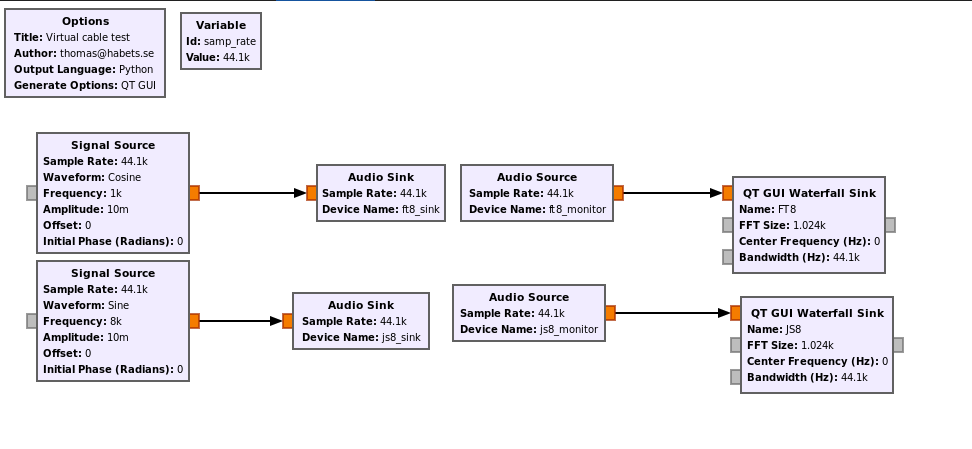

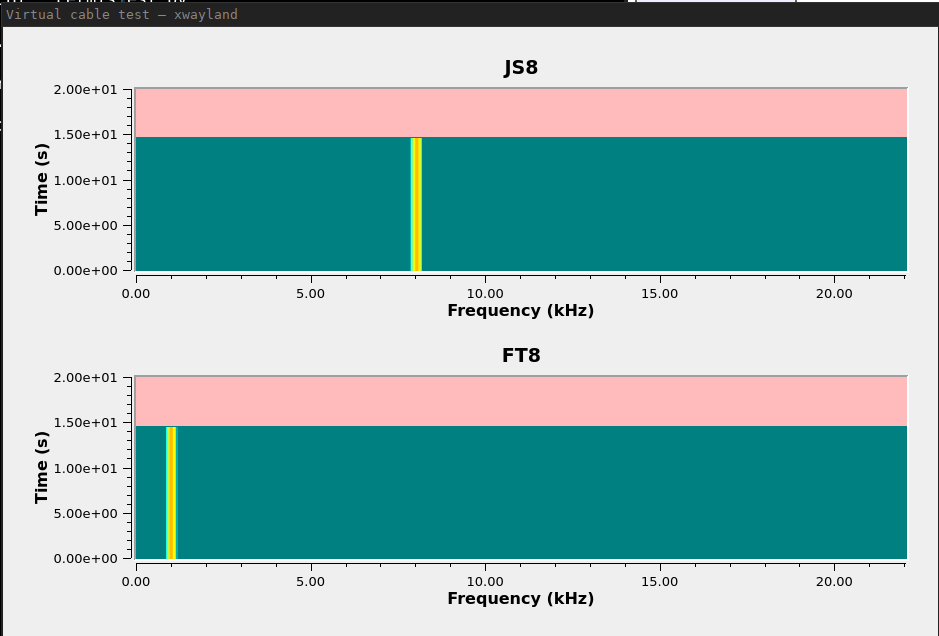

Devices created, now let’s use them

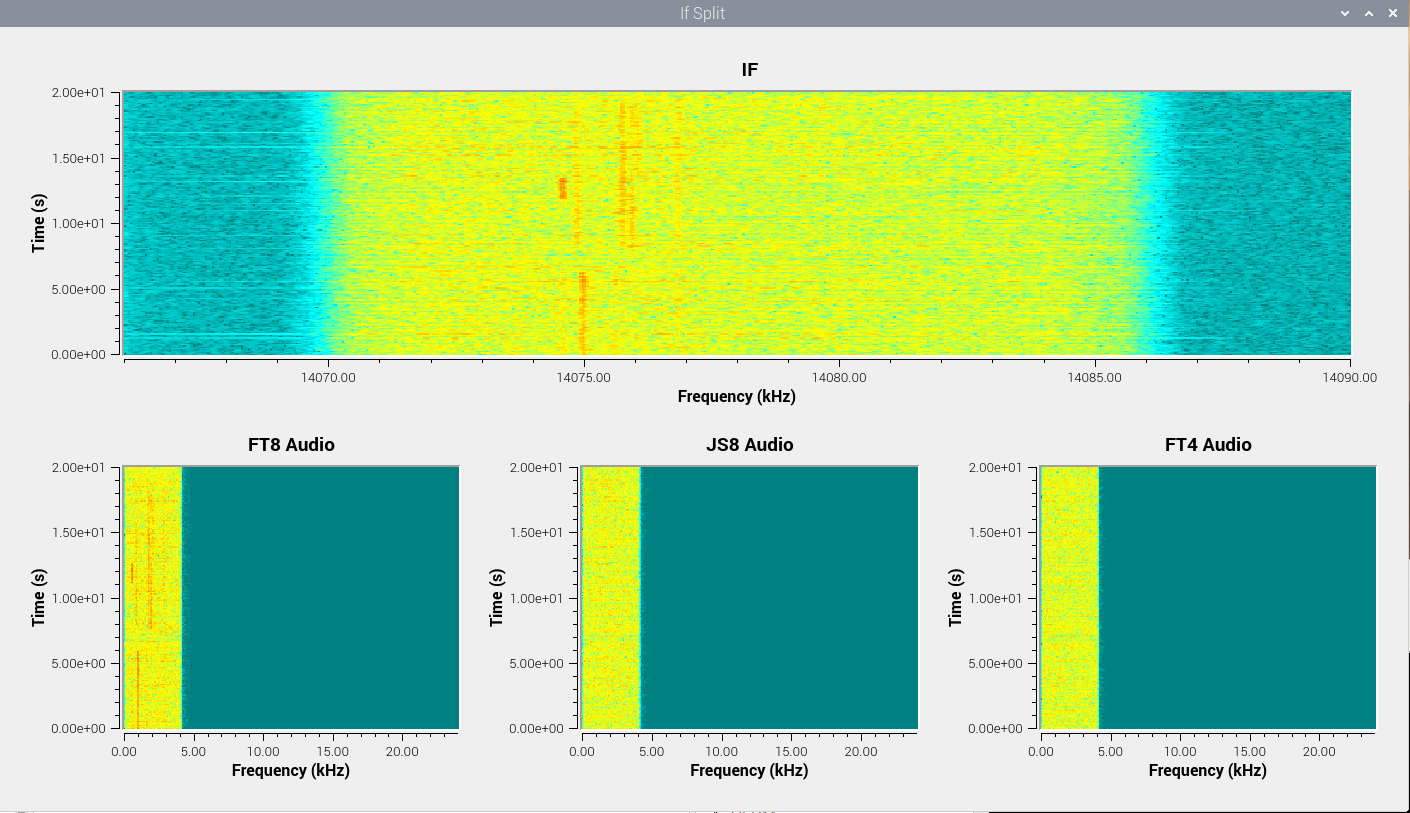

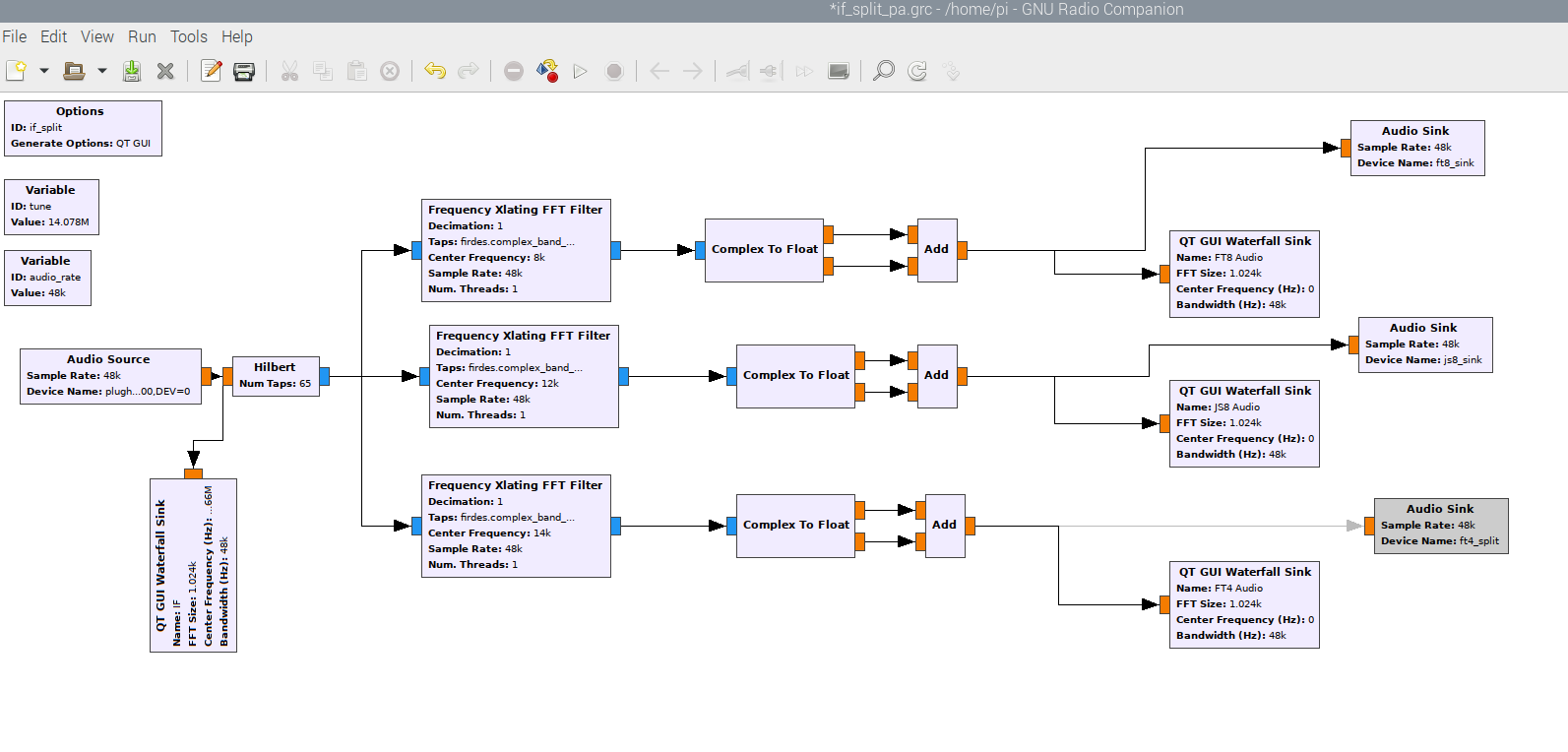

Via its USB port the ICom 7300 can send audio either as you would normally hear it (AF), or be set to send the IF signal instead. The benefit of the latter is that you get about 12 kHz of bandwidth instead of just 4 kHz.

This mode can be configured in Set->Connectors->ACC/USB Output Select. Then, for some reason, you need to put the radio in FM mode. I believe this is because in other modes there’s a filter before the IF stage that severely reduces the available spectrum.

Because it’s the IF signal that is output, it’s taken before the FM demodulator, so it still works for all these modes that would otherwise need SSB.

So if we tune to 7.078 MHz we’ll actually monitor both FT8 and JS8 at the same time (and FT4 at 7.080 MHz too).

All we have to do is take the input signal and produce two outputs, frequency translated and filtered appropriately.
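How far each output has to be shifted is the distance between the mode’s dial frequency and the frequency the radio is tuned to. A back-of-the-envelope sketch using shell arithmetic, assuming the usual 40 m dial frequencies of 7.074 MHz (FT8) and 7.078 MHz (JS8), that the 12 kHz of IF bandwidth is centred on the tuned frequency, and that each mode occupies roughly the 3 kHz above its dial frequency. The helpers `offset_hz` and `fits_in_if` are hypothetical, not part of any GNU Radio block.

```shell
#!/bin/sh
# Sketch: how far (in Hz) each mode's dial frequency is from the tuned
# frequency, and whether the mode fits in the IF passband.
# Assumptions: IF bandwidth centred on the tuned frequency, and each mode
# uses about MODE_WIDTH_HZ above its dial frequency.
IF_BANDWIDTH_HZ=12000
MODE_WIDTH_HZ=3000

offset_hz() {
  # $1 = tuned frequency in Hz, $2 = the mode's dial frequency in Hz
  echo $(( $2 - $1 ))
}

fits_in_if() {
  # Succeeds if [offset, offset + MODE_WIDTH_HZ] lies within
  # +/- IF_BANDWIDTH_HZ/2 of the tuned frequency.
  half=$(( IF_BANDWIDTH_HZ / 2 ))
  [ "$1" -ge $(( -half )) ] && [ $(( $1 + MODE_WIDTH_HZ )) -le "$half" ]
}

TUNED_HZ=7078000
for mode in FT8:7074000 JS8:7078000; do
  name=${mode%%:*}
  dial=${mode#*:}
  off=$(offset_hz "$TUNED_HZ" "$dial")
  if fits_in_if "$off"; then verdict="fits"; else verdict="outside the IF"; fi
  # Shifting by -offset moves the mode's dial frequency to 0 Hz, so its
  # signals land where they would in normal USB audio from the radio.
  echo "$name: shift by $(( -off )) Hz ($verdict)"
done
```

With these values it prints `FT8: shift by 4000 Hz (fits)` and `JS8: shift by 0 Hz (fits)`: FT8 sits 4 kHz below the tuned frequency, while JS8 is right on it.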

And these outputs are now available to both JS8Call and WSJT-X to listen to at the same time, on different virtual audio cards, frequency-translated as if they got the signal directly from the radio.

Well, that was easy.

Links

- https://wiki.gnuradio.org/index.php/ALSAPulseAudio